single_quote_str = 'Hello, World!'

double_quote_str = "Python is great."

triple_quote_str = """This is a
multiline string."""

18 String and Text Manipulation

String and text manipulation are fundamental skills in data science and AI, especially given the exponential growth of unstructured data in the form of text. Emails, social media posts, customer reviews, logs, and documents are just a few examples of textual data sources that organizations analyze to gain insights. The ability to effectively process and analyze this data enables data scientists to extract meaningful patterns, trends, and sentiments that can inform business decisions and strategies.

One of the primary reasons string manipulation is crucial is the need for data cleaning and preprocessing in text analytics. Raw textual data often contains noise such as typos, irrelevant information, or inconsistent formatting. Techniques like tokenization, stemming, lemmatization, and stop-word removal are essential preprocessing steps that transform raw text into a structured format suitable for analysis. Without these steps, models built on unprocessed text data may yield inaccurate or misleading results.
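
As a minimal sketch of two of the preprocessing steps named above (tokenization and stop-word removal), the following uses only the standard library. The `preprocess` helper and the tiny `STOP_WORDS` set are hypothetical and for illustration only; stemming and lemmatization typically rely on a dedicated NLP library and are not shown.

```python
import re

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"the", "is", "a", "an", "and", "of", "to", "in"}

def preprocess(text):
    """Lowercase, tokenize on runs of letters/digits, and drop stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [tok for tok in tokens if tok not in STOP_WORDS]

print(preprocess("The delivery is FAST, and the packaging is great!!"))
# ['delivery', 'fast', 'packaging', 'great']
```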

Transforming text into numerical representations is another critical aspect where string manipulation plays a role. Data scientists use methods like Bag-of-Words, Term Frequency-Inverse Document Frequency (TF-IDF), and word embeddings (e.g., Word2Vec, GloVe) to convert textual data into vectors that machine learning algorithms can process. Count-based representations such as Bag-of-Words and TF-IDF record which terms characterize a document, while word embeddings also capture semantic similarity between words. Either way, the resulting vectors enable algorithms to perform tasks such as classification, clustering, and regression on text data.
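
To make the count-based representations concrete, here is a small sketch of Bag-of-Words and a textbook TF-IDF weighting written with the standard library only. The toy corpus and the `tf_idf` helper are assumptions made for illustration; library implementations often use smoothed variants of the formula, so their numbers can differ.

```python
import math
from collections import Counter

docs = [
    "python is great",
    "python is fun",
    "data science is fun",
]
tokenized = [doc.split() for doc in docs]

# Bag-of-Words: one word-count mapping per document.
bags = [Counter(tokens) for tokens in tokenized]

# Document frequency: in how many documents does each word appear?
doc_freq = Counter(word for tokens in tokenized for word in set(tokens))

def tf_idf(word, bag, n_docs=len(docs)):
    """Textbook TF-IDF: relative term frequency times log(N / df).

    Assumes the word occurs in at least one document of the corpus.
    """
    tf = bag[word] / sum(bag.values())
    idf = math.log(n_docs / doc_freq[word])
    return tf * idf

print(bags[0])
print(round(tf_idf("great", bags[0]), 3))  # rare word, positive weight
print(tf_idf("is", bags[0]))               # appears everywhere, weight 0.0
```

A word that occurs in every document, such as "is" here, receives a weight of zero, which is why TF-IDF tends to highlight the terms that distinguish one document from the others.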

In the realm of natural language processing (NLP), advanced string manipulation techniques are employed to build models for sentiment analysis, language translation, topic modeling, and more. For instance, sentiment analysis involves parsing text to determine the author’s attitude or emotional tone, which requires nuanced handling of language, context, and even sarcasm. Effective string manipulation allows data scientists to handle these complexities and improve model performance.

Finally, the importance of string and text manipulation extends to data visualization and communication of results. Summarizing findings from textual data in a comprehensible manner often involves generating word clouds, frequency distributions, or highlighting key phrases. Mastery of string manipulation enables data scientists to present their insights effectively, making the data actionable for stakeholders. In summary, string and text manipulation are indispensable in unlocking the value hidden within unstructured textual data, making them essential skills in the field of data science.

18.1 String manipulation with Python

Whether you are processing user input, reading from files, or handling data from the web, you will frequently encounter tasks that require manipulating strings. Python provides a rich set of tools and libraries to work efficiently with text data.

In Python, a string is an immutable sequence of characters enclosed within single quotes ('...'), double quotes ("..."), or triple quotes ('''...''' or """...""") for multiline strings.
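
As a minimal sketch of the quoting styles and of what immutability means in practice (the variable names are illustrative):

```python
# The three quoting styles all produce ordinary str objects.
single_quote_str = 'Hello, World!'
double_quote_str = "Python is great."
triple_quote_str = """This is a
multiline string."""

# Triple-quoted literals keep their line breaks.
print(triple_quote_str.count("\n"))

# Strings are immutable: item assignment raises TypeError.
try:
    single_quote_str[0] = "J"
except TypeError as err:
    print(f"Cannot modify in place: {err}")

# Instead, build a new string from pieces of the old one.
new_str = "J" + single_quote_str[1:]
print(new_str)
```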

f-Strings

f-strings are a modern and efficient way to format strings in Python. They offer several advantages over older methods like % formatting and str.format(). By allowing expressions to be embedded directly within string literals, f-strings make code more readable and concise.
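
For comparison, the sketch below writes the same message with the three formatting styles mentioned above. The values are made up for illustration; `:.1f` is a standard format specifier that rounds to one decimal place.

```python
name = "Alice"
score = 91.456

# Older styles: the values are listed separately from the template.
percent_style = "%s scored %.1f points" % (name, score)
format_style = "{} scored {:.1f} points".format(name, score)

# f-string: the expressions sit directly inside the literal.
fstring_style = f"{name} scored {score:.1f} points"

print(fstring_style)
print(percent_style == format_style == fstring_style)
```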

As illustrated in the following example, an f-string is defined by prefixing a string literal with f or F. Expressions inside the string are placed within curly braces {}.

name = "Alice"

greeting = f"Hello, {name}!"

print(greeting)

Hello, Alice!

Variables can be directly embedded within f-strings using curly braces.

first_name = "John"

last_name = "Doe"

full_name = f"{first_name} {last_name}"

print(full_name)

John Doe

f-strings can evaluate expressions, including arithmetic operations, function calls, and method invocations.

a = 5

b = 3

result = f"{a} + {b} = {a + b}"

print(result)

5 + 3 = 8

def mypower(a, b):

    return a**b

a=3

b=4

message = f"the result of {a} to the power of {b} is {mypower(a,b)}"

print(message)

the result of 3 to the power of 4 is 81

You can access dictionary keys and list indices within f-strings.

person = {"name": "Bob", "age": 25}

print(f"{person['name']} is {person['age']} years old.")

Bob is 25 years old.

String methods

Python strings come with a variety of built-in methods for manipulation.

text = "Python"

print(text.lower()) # Output: python

print(text.upper()) # Output: PYTHON

text = " Hello, World! "

print(text.strip()) # Output: Hello, World!

print(text.lstrip()) # Output: 'Hello, World! '

print(text.rstrip()) # Output: ' Hello, World!'

python

PYTHON

Hello, World!

Hello, World!

Hello, World!

You will often need to find, replace, and count substrings in your projects:

text = "Hello, World!"

index = text.find("World")

print(index) # Output: 7

7

new_text = text.replace("World", "Python")

print(new_text) # Output: Hello, Python!

Hello, Python!

count = text.count('l')

print(count) # Output: 3

3

Splitting and joining strings are also common tasks in data processing.

words = "Python is fun".split(' ')

print(words) # Output: ['Python', 'is', 'fun']

['Python', 'is', 'fun']

joined_text = ' '.join(['Python', 'is', 'fun'])

print(joined_text) # Output: Python is fun

Python is fun

18.2 String manipulation with Pandas

Pandas offers similar methods to manipulate strings and text, exposed through the .str accessor of a Series.

Given the following DataFrame:

import pandas as pd

# Sample DataFrame

data = {

    'Name': ['Alice Smith', 'bob Johnson', 'CHARLIE', 'david lee', 'Eva Wang', 'Beaniee Greenlee'],

    'Email': ['alice.smith@example.com', 'bob_johnson@example.net', 'charlie@example.org', 'davidlee@example.com', None, 'beaniee@gmail.com'],

    'Age': [25, 38, 29, 42, 30, 45]

}

df = pd.DataFrame(data)

df

| | Name | Email | Age |

|---|---|---|---|

| 0 | Alice Smith | alice.smith@example.com | 25 |

| 1 | bob Johnson | bob_johnson@example.net | 38 |

| 2 | CHARLIE | charlie@example.org | 29 |

| 3 | david lee | davidlee@example.com | 42 |

| 4 | Eva Wang | None | 30 |

| 5 | Beaniee Greenlee | beaniee@gmail.com | 45 |

You can apply several methods to transform strings as needed, for instance:

df['Name_upper'] = df['Name'].str.upper()

df[['Name', 'Name_upper']]

| | Name | Name_upper |

|---|---|---|

| 0 | Alice Smith | ALICE SMITH |

| 1 | bob Johnson | BOB JOHNSON |

| 2 | CHARLIE | CHARLIE |

| 3 | david lee | DAVID LEE |

| 4 | Eva Wang | EVA WANG |

| 5 | Beaniee Greenlee | BEANIEE GREENLEE |

df['Name_title'] = df['Name'].str.title()

df[['Name', 'Name_title']]

| | Name | Name_title |

|---|---|---|

| 0 | Alice Smith | Alice Smith |

| 1 | bob Johnson | Bob Johnson |

| 2 | CHARLIE | Charlie |

| 3 | david lee | David Lee |

| 4 | Eva Wang | Eva Wang |

| 5 | Beaniee Greenlee | Beaniee Greenlee |

# Replace spaces with underscores in 'Name' column

df['Name_underscore'] = df['Name'].str.replace(' ', '_')

print(df[['Name', 'Name_underscore']])

Name Name_underscore

0 Alice Smith Alice_Smith

1 bob Johnson bob_Johnson

2 CHARLIE CHARLIE

3 david lee david_lee

4 Eva Wang Eva_Wang

5 Beaniee Greenlee Beaniee_Greenlee

Splitting and concatenating strings can be performed with Series.str.split() and Series.str.cat() respectively.

df['Name_split'] = df['Name'].str.split(' ')

df[['Name', 'Name_split']]

| | Name | Name_split |

|---|---|---|

| 0 | Alice Smith | [Alice, Smith] |

| 1 | bob Johnson | [bob, Johnson] |

| 2 | CHARLIE | [CHARLIE] |

| 3 | david lee | [david, lee] |

| 4 | Eva Wang | [Eva, Wang] |

| 5 | Beaniee Greenlee | [Beaniee, Greenlee] |

# Expand the split names into separate columns

df[['First_Name', 'Last_Name']] = df['Name'].str.split(' ', expand=True)

df[['Name', 'First_Name', 'Last_Name']]

| | Name | First_Name | Last_Name |

|---|---|---|---|

| 0 | Alice Smith | Alice | Smith |

| 1 | bob Johnson | bob | Johnson |

| 2 | CHARLIE | CHARLIE | None |

| 3 | david lee | david | lee |

| 4 | Eva Wang | Eva | Wang |

| 5 | Beaniee Greenlee | Beaniee | Greenlee |

# Concatenate 'First_Name' and 'Last_Name' with a hyphen

df['Name_hyphen'] = df['First_Name'].str.cat(df['Last_Name'], sep='-')

df[['First_Name', 'Last_Name', 'Name_hyphen']]

| | First_Name | Last_Name | Name_hyphen |

|---|---|---|---|

| 0 | Alice | Smith | Alice-Smith |

| 1 | bob | Johnson | bob-Johnson |

| 2 | CHARLIE | None | NaN |

| 3 | david | lee | david-lee |

| 4 | Eva | Wang | Eva-Wang |

| 5 | Beaniee | Greenlee | Beaniee-Greenlee |
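
The NaN for CHARLIE appears because a missing value on either side makes the concatenated result missing. If that is not what you want, `str.cat` accepts an `na_rep` argument. The sketch below uses two small, made-up Series rather than the DataFrame above, so that it runs on its own.

```python
import pandas as pd

first = pd.Series(["Alice", "CHARLIE"])
last = pd.Series(["Smith", None])

# Default behaviour: a missing value on either side yields a missing result.
default_cat = first.str.cat(last, sep="-")

# With na_rep, missing values are replaced before concatenation.
filled_cat = first.str.cat(last, sep="-", na_rep="")

print(default_cat.tolist())
print(filled_cat.tolist())
```

Note that with an empty `na_rep` the separator is still added, so the second name becomes `CHARLIE-`; whether that is acceptable depends on the use case.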

In some instances you might need to apply your own functions, for instance:

# Define a custom function to reverse strings

def reverse_string(s):

    if pd.isnull(s):

        return s

    return s[::-1]

# Apply the custom function to 'Name'

df['Name_reversed'] = df['Name'].apply(reverse_string)

print(df[['Name', 'Name_reversed']])

Name Name_reversed

0 Alice Smith htimS ecilA

1 bob Johnson nosnhoJ bob

2 CHARLIE EILRAHC

3 david lee eel divad

4 Eva Wang gnaW avE

5 Beaniee Greenlee eelneerG eeinaeB

The following table lists Pandas methods you can use to manipulate strings and text.

| Method | Description |
|---|---|
| `str.lower()` | Converts strings to lowercase. |
| `str.upper()` | Converts strings to uppercase. |
| `str.title()` | Converts strings to title case (capitalizes the first letter of each word). |
| `str.capitalize()` | Capitalizes the first letter of the string. |
| `str.strip()` | Removes leading and trailing whitespace. |
| `str.lstrip()` | Removes leading whitespace. |
| `str.rstrip()` | Removes trailing whitespace. |
| `str.replace(pattern, replacement)` | Replaces occurrences of a substring or regex pattern with another string. |
| `str.contains(pattern)` | Returns True if a string contains a substring or matches a regex pattern. |
| `str.startswith(pattern)` | Returns True if the string starts with the specified pattern. |
| `str.endswith(pattern)` | Returns True if the string ends with the specified pattern. |
| `str.match(pattern)` | Checks if the string matches a regex pattern from the start. |
| `str.fullmatch(pattern)` | Checks if the entire string matches a regex pattern. |
| `str.extract(pattern)` | Extracts the first match of a regex pattern. |
| `str.extractall(pattern)` | Extracts all matches of a regex pattern. |
| `str.findall(pattern)` | Finds all occurrences of a regex pattern. |
| `str.len()` | Returns the length of the string. |
| `str.split(separator)` | Splits the string by the specified separator or regex pattern. |
| `str.rsplit(separator)` | Splits the string from the right by the specified separator or regex pattern. |
| `str.join(separator)` | Joins elements of a list with the specified separator. |
| `str.get(index)` | Retrieves the element at the specified position from a list-like string. |
| `str.pad(width)` | Pads the string with spaces or a specified character to reach a specified width. |
| `str.zfill(width)` | Pads the string with zeros to reach a specified width. |
| `str.repeat(times)` | Repeats the string a specified number of times. |
| `str.wrap(width)` | Wraps long strings into lines of the specified width. |
| `str.cat(others)` | Concatenates strings with another Series or list-like object. |
| `str.count(pattern)` | Counts the occurrences of a substring or regex pattern in the string. |
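
The following sketch combines a few of the methods from the table on a small, made-up Series that contains a missing value. It is meant as an illustration of how the `.str` accessor behaves with missing data, not as a recommended pipeline.

```python
import pandas as pd

emails = pd.Series(["alice.smith@example.com", "bob_johnson@example.net", None])

# na=False treats missing entries as "no match" instead of propagating NaN,
# which keeps the result usable as a boolean mask.
is_com = emails.str.endswith(".com", na=False)

# str.len() returns a missing value for missing entries.
lengths = emails.str.len()

# Methods can be chained through repeated use of the .str accessor.
user_upper = emails.str.split("@").str.get(0).str.upper()

print(is_com.tolist())
print(user_upper.tolist())
```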

18.2.1 Regular Expressions

Regular expressions (called REs, regexes, or regex patterns) are essentially a tiny, highly specialized programming language that allows you to define patterns for string matching. They enable you to capture complex patterns such as URLs, postal codes embedded in text, email addresses, and so on. You can also use regular expressions to modify a string or to split it apart in various ways.

Regular expressions are used to operate on strings. The most common task is matching characters.

Matching characters

Most letters and characters will simply match themselves. For example, the regular expression test will match the string test exactly.

Some characters, however, are special metacharacters and do not match themselves. Instead, they signal that some out-of-the-ordinary thing should be matched, or they affect other portions of the regular expression by repeating them or changing their meaning. The following table lists the regex metacharacters you can use to perform character matching.

| Metacharacter | Description | Example |
|---|---|---|
| `.` | Matches any character except a newline | `a.b` matches `aab`, `a3b`, but not `ab` |
| `^` | Anchors the match at the start of a string | `^Hello` matches `Hello world`, but not `World Hello` |
| `$` | Anchors the match at the end of a string | `world$` matches `Hello world`, but not `worlds` |
| `?` | Matches 0 or 1 repetition of the preceding element | `ab?c` matches `ac`, `abc` |
| `[]` | Matches any single character in the brackets (character class) | `[aeiou]` matches any vowel in `cat`, `dog` |
| `\|` | Alternation; matches either the expression on the left or right | `cat\|dog` matches `cat` or `dog` |
| `()` | Groups expressions and creates capturing groups | `(abc)+` matches `abc`, `abcabc` |
| `\` | Escapes a metacharacter or denotes a special sequence | `\.` matches a literal dot, `\d` matches a digit |
| `\d` | Matches any digit (equivalent to `[0-9]`) | `\d{3}` matches `123`, `456` |
| `\D` | Matches any non-digit character | `\D` matches `a`, `b`, `!`, `#` |
| `\w` | Matches any word character: a letter, digit, or underscore (equivalent to `[a-zA-Z0-9_]` for ASCII text) | `\w+` matches `abc123`, `hello_world` |
| `\W` | Matches any non-word character | `\W` matches `!`, `@`, `#` |
| `\s` | Matches any whitespace character (space, tab, newline) | `\s+` matches spaces, tabs, etc. |
| `\S` | Matches any non-whitespace character | `\S+` matches any non-space text |
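
Several rows of the table can be checked directly with Python's built-in `re` module. The sketch below is only a quick self-check; the `cases` list is made up from the examples in the table.

```python
import re

# Each tuple: (pattern, text, whether re.search should find a match).
cases = [
    (r"a.b", "a3b", True),
    (r"a.b", "ab", False),             # '.' needs exactly one character
    (r"^Hello", "Hello world", True),
    (r"^Hello", "World Hello", False),
    (r"world$", "Hello world", True),
    (r"world$", "worlds", False),
    (r"ab?c", "ac", True),
    (r"cat|dog", "hot dog", True),
    (r"\d{3}", "call 456 now", True),
]

results = []
for pattern, text, expected in cases:
    found = re.search(pattern, text) is not None
    results.append(found == expected)
    print(f"{pattern!r:12} {text!r:16} -> {found}")

print(all(results))
```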

The following code illustrates the application of a regular expression to extract the first word in the column ‘Name’.

df['First_Name'] = df['Name'].str.extract(r'^(\w+)')

df[['Name','First_Name']]

| | Name | First_Name |

|---|---|---|

| 0 | Alice Smith | Alice |

| 1 | bob Johnson | bob |

| 2 | CHARLIE | CHARLIE |

| 3 | david lee | david |

| 4 | Eva Wang | Eva |

| 5 | Beaniee Greenlee | Beaniee |

A common use case is processing email-related information, for instance:

df['Domain'] = df['Email'].str.extract(r'(?<=@)([\w.]+)')

df[['Email','Domain']]

| | Email | Domain |

|---|---|---|

| 0 | alice.smith@example.com | example.com |

| 1 | bob_johnson@example.net | example.net |

| 2 | charlie@example.org | example.org |

| 3 | davidlee@example.com | example.com |

| 4 | None | NaN |

| 5 | beaniee@gmail.com | gmail.com |
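
The pattern used for the domain relies on a lookbehind, `(?<=@)`, which requires an `@` immediately before the match without including it in the result. As a sketch with the standard `re` module, the same domain can also be obtained with a plain capturing group placed after a literal `@`:

```python
import re

email = "alice.smith@example.com"

# Lookbehind: '@' must precede the match but is not part of it.
with_lookbehind = re.search(r"(?<=@)[\w.]+", email).group(0)

# Equivalent result with a capturing group after a literal '@'.
with_group = re.search(r"@([\w.]+)", email).group(1)

print(with_lookbehind, with_group)
```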

df['Username'] = df['Email'].str.extract(r'^([\w.]+)')

Finding the right regular expression can be quite a frustrating experience. The good news is that you can rely on generative AI to help you with this.

For instance, to find a regex that captures URLs, you can submit the following prompt:

give me a regex to extract URLs from text

which will return something similar to:

https?://(?:www\.)?[-\w]+(?:\.[-\w]+)+(?:[\w.,@?^=%&:/~+#-]*[\w@?^=%&/~+#-])?

Repeating Things

Being able to match varying sets of characters is the first thing regular expressions can do that isn’t already possible with Python’s native methods. Another capability is that you can specify that portions of the regular expressions must be repeated a certain number of times.

The * metacharacter is used to match zero or more repetitions of the preceding element, for example, ca*t will match ‘ct’ (0 ‘a’ characters), ‘cat’ (1 ‘a’), ‘caaat’ (3 ‘a’ characters), and so forth.

| Metacharacter | Description | Example |
|---|---|---|
| `*` | Matches 0 or more repetitions of the preceding element | `ab*c` matches `ac`, `abc`, `abbc` |
| `+` | Matches 1 or more repetitions of the preceding element | `ab+c` matches `abc`, `abbc`, but not `ac` |
| `{m,n}` | Matches between m and n repetitions of the preceding element | `a{2,4}` matches `aa`, `aaa`, `aaaa` |
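
The quantifiers in the table can be tried out with `re.fullmatch`, which succeeds only when the whole string matches the pattern. The `full` helper below is a hypothetical convenience wrapper for this sketch.

```python
import re

def full(pattern, text):
    """Return True when the whole text matches the pattern."""
    return re.fullmatch(pattern, text) is not None

# '*': zero or more 'b'
print(full(r"ab*c", "ac"), full(r"ab*c", "abbc"))
# '+': one or more 'b'
print(full(r"ab+c", "ac"), full(r"ab+c", "abc"))
# '{2,4}': between two and four 'a'
print(full(r"a{2,4}", "a"), full(r"a{2,4}", "aaa"), full(r"a{2,4}", "aaaaa"))
```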

The following examples illustrate some uses of regular expressions to match repeated patterns.

# Use regex with the '+' metacharacter to find words with one or more 'e's

df['Words_with_e'] = df['Name'].str.findall(r'\b\w*e+\w*\b')

df[['Name','Words_with_e']]

| | Name | Words_with_e |

|---|---|---|

| 0 | Alice Smith | [Alice] |

| 1 | bob Johnson | [] |

| 2 | CHARLIE | [] |

| 3 | david lee | [lee] |

| 4 | Eva Wang | [] |

| 5 | Beaniee Greenlee | [Beaniee, Greenlee] |

# Count occurrences of repeating 'e'

df['Repeating_e_count'] = df['Name'].str.count(r'(e{2,})')

df[['Name','Repeating_e_count']]

| | Name | Repeating_e_count |

|---|---|---|

| 0 | Alice Smith | 0 |

| 1 | bob Johnson | 0 |

| 2 | CHARLIE | 0 |

| 3 | david lee | 1 |

| 4 | Eva Wang | 0 |

| 5 | Beaniee Greenlee | 3 |

Say you need to extract all URLs from the following Pandas DataFrame:

import pandas as pd

# Sample DataFrame with text containing URLs

data = {

    'Text': [

        'Visit https://www.example.com for more details.',

        'Check out http://example.org or www.testsite.com now!',

        'No links here!',

        'Another site: https://subdomain.example.co.uk and a shorter one www.short.com.',

        'Some more links: http://longexample.com/page,another link is:, https://secure.example.com/path'

    ]

}

pd.set_option('display.max_colwidth', None)

df = pd.DataFrame(data)

df

| | Text |

|---|---|

| 0 | Visit https://www.example.com for more details. |

| 1 | Check out http://example.org or www.testsite.com now! |

| 2 | No links here! |

| 3 | Another site: https://subdomain.example.co.uk and a shorter one www.short.com. |

| 4 | Some more links: http://longexample.com/page,another link is:, https://secure.example.com/path |

You can submit the following prompt:

Given the previous dataframe df, give me code using REGEX to extract all URLs from the dataframe

which will return something similar to:

# Regex to match URLs

url_pattern = r'(https?://[^\s,]+|www\.[^\s,]+)'

# Extract all URLs using the regex pattern

df['URLs'] = df['Text'].str.findall(url_pattern)

df[['Text','URLs']]

| | Text | URLs |

|---|---|---|

| 0 | Visit https://www.example.com for more details. | [https://www.example.com] |

| 1 | Check out http://example.org or www.testsite.com now! | [http://example.org, www.testsite.com] |

| 2 | No links here! | [] |

| 3 | Another site: https://subdomain.example.co.uk and a shorter one www.short.com. | [https://subdomain.example.co.uk, www.short.com.] |

| 4 | Some more links: http://longexample.com/page,another link is:, https://secure.example.com/path | [http://longexample.com/page, https://secure.example.com/path] |
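
Note that in row 3 the match `www.short.com.` includes the full stop that ends the sentence, because the pattern accepts any character other than whitespace or a comma. One possible refinement, sketched here with the standard `re` module on that single sentence, is to strip trailing sentence punctuation after matching. The set of characters to strip is an assumption and may need adjusting for your data.

```python
import re

url_pattern = r"(https?://[^\s,]+|www\.[^\s,]+)"
text = "Another site: https://subdomain.example.co.uk and a shorter one www.short.com."

raw_urls = re.findall(url_pattern, text)

# Strip sentence punctuation that was swept up at the end of a match.
clean_urls = [url.rstrip(".!?;:") for url in raw_urls]

print(raw_urls)
print(clean_urls)
```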

18.3 Text manipulation with Generative AI

Generative AI has revolutionized how we approach text processing tasks by enabling systems to create, modify, and understand natural language in ways that were previously unimaginable. Traditional text processing methods typically involved rule-based approaches or statistical models to understand, manipulate, or generate text. However, with the advent of large language models (LLMs), text processing has become far more sophisticated and capable of:

- Text Generation: These models can generate original, coherent, and meaningful text based on prompts. Applications include writing assistance, content creation, dialogue generation in chatbots, and storytelling.

- Summarisation: Generative AI can summarize large documents or articles, capturing the main points while maintaining fluency. Summarisation can be extractive (selecting key phrases from the original text) or abstractive (creating new sentences that encapsulate the main ideas).

- Paraphrasing: AI models can rewrite text in different styles or simplify complex sentences without losing meaning, which is useful in education, content rewriting, or providing multiple versions of information.

- Text Translation: Modern models can understand and generate text in multiple languages, enabling seamless translation between languages while maintaining nuances and context.

- Text Completion: Generative models are skilled at completing text based on a few initial words or sentences, whether for simple sentence completions or more complex document extensions.

- Sentiment Analysis and Understanding: While often used in classification, generative AI also helps interpret sentiments and emotions within text, producing nuanced insights into the tone, mood, or intent of a passage.

- Code Generation: Besides natural language, generative AI can write programming code based on textual descriptions, facilitating tasks such as automating script writing or assisting developers in debugging and code completion.

The following examples require you to sign up and get an API key from OpenAI. Refer to the OpenAI documentation for details.
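
API keys are secrets and should not be written into notebooks or scripts that are shared or committed to version control. A common alternative, sketched below, is to set the `OPENAI_API_KEY` environment variable outside the code and only check for its presence. The `get_api_key` helper is hypothetical and not part of the OpenAI library.

```python
import os

def get_api_key(var_name="OPENAI_API_KEY"):
    """Return the key stored in an environment variable, or raise if unset."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before running the examples."
        )
    return key
```

Calling `get_api_key()` at the top of a script fails early with a clear message when the variable has not been set.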

Text Generation

Text generation can be quite useful in marketing contexts, for instance to generate campaigns or media communications.

For illustration purposes let’s assume we have the following list of products and we need to create a marketing campaign for each product:

| | productCode | productDescription |

|---|---|---|

| 0 | P001 | Laptop - 15 inch, 8GB RAM, 256GB SSD |

| 1 | P002 | Smartphone - 128GB, 6GB RAM, Dual Camera |

| 2 | P003 | Wireless Headphones - Noise Cancelling, Over-Ear |

The following code illustrates the use of generative AI services to create our marketing campaigns:

from openai import OpenAI

import os

# Replace 'your-api-key' with your actual OpenAI API key

# Set the API key as an environment variable

os.environ["OPENAI_API_KEY"] = 'your-api-key'

client = OpenAI()

def marketing_campaign(text):

    prompt = f"Create a two sentence marketing campaign for the following text: \n\n{text}\n\n"

    response = client.chat.completions.create(

        model="gpt-3.5-turbo", # or "gpt-4"

        messages=[

            {"role": "user", "content": prompt}

        ]

    )

    campaign = response.choices[0].message.content.strip()

    return campaign

# Apply the campaign function to the 'productDescription' column

df['productCampaign'] = df['productDescription'].apply(marketing_campaign)

df

| | productCode | productDescription | productCampaign |

|---|---|---|---|

| 0 | P001 | Laptop - 15 inch, 8GB RAM, 256GB SSD | Experience lightning-fast performance and a crystal-clear display with our 15-inch laptop featuring 8GB of RAM and a 256GB SSD. Elevate your productivity and efficiency with our powerful and sleek laptop - the perfect combination of speed and storage. |

| 1 | P002 | Smartphone - 128GB, 6GB RAM, Dual Camera | "Unlock unlimited possibilities with our powerful smartphone featuring 128GB storage and 6GB RAM for seamless multitasking. Capture every moment in stunning detail with the dual camera capabilities that bring your photos to life." |

| 2 | P003 | Wireless Headphones - Noise Cancelling, Over-Ear | Immerse yourself in your favorite tunes without any outside distractions with our noise-cancelling over-ear wireless headphones. Experience superior sound quality and unmatched comfort with our premium headphones. |

Text Translation

For illustration purposes, say we have the following medical records, which need to be translated into Spanish. The code after the table uses OpenAI's services to perform the translation:

| PatientCode | NameSurname | MedicalCode | Diagnosis |

|---|---|---|---|

| 101 | John Doe | M123 | Patient has been diagnosed with hypertension, characterized by consistently elevated blood pressure levels over time, requiring ongoing monitoring and lifestyle changes. |

| 102 | Jane Smith | A456 | Patient suffers from chronic inflammatory disease, a chronic respiratory condition causing difficulty in breathing, wheezing, and shortness of breath, triggered by allergens or exercise. |

| 103 | Michael Brown | B789 | Patient has Type 2 Diabetes, a chronic condition that affects the way the body processes blood sugar, often requiring medication and changes in diet. |

| 104 | Emily Davis | C012 | Patient experiences chronic back pain, primarily in the lower back region, due to long-term musculoskeletal issues that impact daily functioning. |

| 105 | James Wilson | D345 | Patient frequently suffers from migraines, characterized by intense headaches, often accompanied by nausea, sensitivity to light, and sometimes visual disturbances. |

from openai import OpenAI

import os

# Replace 'your-api-key' with your actual OpenAI API key

# Set the API key as an environment variable

os.environ["OPENAI_API_KEY"] = 'your-api-key'

client = OpenAI()

def translate_text(text, target_language):

    prompt = f"Translate the following text to {target_language}:\n\n{text}\n\nTranslation:"

    response = client.chat.completions.create(

        model="gpt-3.5-turbo", # or "gpt-4"

        messages=[

            {"role": "user", "content": prompt}

        ]

    )

    translation = response.choices[0].message.content.strip()

    return translation

# Specify the target language for translation

target_language = "Spanish" # Change to your desired language

# Apply the translation function to the 'Diagnosis' column

df['Diagnosis_Translated'] = df['Diagnosis'].apply(lambda x: translate_text(x, target_language))

df

| PatientCode | NameSurname | MedicalCode | Diagnosis | Diagnosis_Translated |

|---|---|---|---|---|

| 101 | John Doe | M123 | Patient has been diagnosed with hypertension, characterized by consistently elevated blood pressure levels over time, requiring ongoing monitoring and lifestyle changes. | El paciente ha sido diagnosticado con hipertensión, caracterizada por niveles constantemente elevados de presión arterial a lo largo del tiempo, que requiere monitoreo continuo y cambios en el estilo de vida. |

| 102 | Jane Smith | A456 | Patient suffers from chronic inflammatory disease, a chronic respiratory condition causing difficulty in breathing, wheezing, and shortness of breath, triggered by allergens or exercise. | El paciente sufre de una enfermedad inflamatoria crónica, una condición respiratoria crónica que causa dificultad para respirar, sibilancias y falta de aire, desencadenada por alérgenos o ejercicio. |

| 103 | Michael Brown | B789 | Patient has Type 2 Diabetes, a chronic condition that affects the way the body processes blood sugar, often requiring medication and changes in diet. | El paciente tiene diabetes tipo 2, una condición crónica que afecta la forma en que el cuerpo procesa el azúcar en la sangre, a menudo requiere medicamentos y cambios en la dieta. |

| 104 | Emily Davis | C012 | Patient experiences chronic back pain, primarily in the lower back region, due to long-term musculoskeletal issues that impact daily functioning. | El paciente experimenta dolor crónico de espalda, principalmente en la región lumbar, debido a problemas musculoesqueléticos a largo plazo que afectan su funcionamiento diario. |

| 105 | James Wilson | D345 | Patient frequently suffers from migraines, characterized by intense headaches, often accompanied by nausea, sensitivity to light, and sometimes visual disturbances. | El paciente sufre frecuentemente de migrañas, caracterizadas por intensos dolores de cabeza, a menudo acompañados de náuseas, sensibilidad a la luz y a veces trastornos visuales. |

Text Summarisation

We might want medical information to be summarized and rewritten for a non-medical audience (e.g. patients), as shown in the following example.

from openai import OpenAI

import os

# Replace 'your-api-key' with your actual OpenAI API key

# Set the API key as an environment variable

os.environ["OPENAI_API_KEY"] = 'your-api-key'

client = OpenAI()

def text_summarizer(text):

    prompt = f"Summarize the following text in one sentence, make it readable to a non-medical audience: \n\n{text}\n\n"

    response = client.chat.completions.create(

        model="gpt-4",

        messages=[

            {"role": "user", "content": prompt}

        ]

    )

    summary = response.choices[0].message.content.strip()

    return summary

df['DiagnosisSimplified'] = df['Diagnosis'].apply(text_summarizer)

df[['Diagnosis','DiagnosisSimplified']]

| PatientCode | Diagnosis | DiagnosisSimplified |

|---|---|---|

| 101 | Patient has been diagnosed with hypertension, characterized by consistently elevated blood pressure levels over time, requiring ongoing monitoring and lifestyle changes. | The patient has high blood pressure that needs regular check-ups and changes in daily habits. |

| 102 | Patient suffers from chronic inflammatory disease, a chronic respiratory condition causing difficulty in breathing, wheezing, and shortness of breath, triggered by allergens or exercise. | The patient has a long-term breathing condition that makes it hard to breathe, causes wheezing, and shortness of breath, usually set off by things like allergens or physical exercise. |

| 103 | Patient has Type 2 Diabetes, a chronic condition that affects the way the body processes blood sugar, often requiring medication and changes in diet. | The patient has Type 2 Diabetes, a long-term disease that impacts how their body handles sugar, often needing drugs and dietary changes. |

| 104 | Patient experiences chronic back pain, primarily in the lower back region, due to long-term musculoskeletal issues that impact daily functioning. | The person is suffering from ongoing lower back pain because of long-term body issues that affect their everyday activities. |

| 105 | Patient frequently suffers from migraines, characterized by intense headaches, often accompanied by nausea, sensitivity to light, and sometimes visual disturbances. | The patient often experiences severe migraines, which include intense headaches, feeling sick, sensitivity to light, and occasional vision issues. |

Sentiment Analysis and Understanding

Generative AI is becoming better at understanding text. This is useful, for instance, to automate the analysis of customer reviews, as shown in the following example:

| | MovieName | MovieReview |

|---|---|---|

| 0 | Inception | Inception is a brilliant masterpiece with mind-bending concepts and stunning visuals. A must-watch for fans of complex narratives and sci-fi thrillers. |

| 1 | The Room | The Room is a disaster of a movie, filled with awkward dialogue, strange acting, and nonsensical plotlines. It’s so bad it has gained a cult following. |

| 2 | The Dark Knight | The Dark Knight delivers an intense, gripping story with outstanding performances, particularly by Heath Ledger as the Joker. It redefined superhero films. |

| 3 | Cats | Cats is a visually bizarre and confusing adaptation with poor CGI and disjointed storytelling. It’s hard to sit through without cringing. |

| 4 | Avengers: Endgame | Avengers: Endgame is a spectacular conclusion to the Marvel saga, filled with epic action scenes, emotional depth, and fan-pleasing moments. |

from openai import OpenAI

import os

# Replace 'your-api-key' with your actual OpenAI API key

# Set the API key as an environment variable

os.environ["OPENAI_API_KEY"] = 'your-api-key'

client = OpenAI()

def sentiment_analysis(text):

    prompt = f"Read the following text and determine its sentiment. Sentiment can be negative, neutral, positive. Just provide the sentiment.\n\n{text}\n\n"

    response = client.chat.completions.create(

        model="gpt-4",

        messages=[

            {"role": "user", "content": prompt}

        ]

    )

    sentiment = response.choices[0].message.content.strip()

    return sentiment

df['MovieSentiment'] = df['MovieReview'].apply(lambda x: sentiment_analysis(x))

df[['MovieReview','MovieSentiment']]| MovieReview | MovieSentiment | |

|---|---|---|

| 0 | Inception is a brilliant masterpiece with mind-bending concepts and stunning visuals. A must-watch for fans of complex narratives and sci-fi thrillers. | Positive |

| 1 | The Room is a disaster of a movie, filled with awkward dialogue, strange acting, and nonsensical plotlines. It’s so bad it has gained a cult following. | Negative |

| 2 | The Dark Knight delivers an intense, gripping story with outstanding performances, particularly by Heath Ledger as the Joker. It redefined superhero films. | Positive |

| 3 | Cats is a visually bizarre and confusing adaptation with poor CGI and disjointed storytelling. It’s hard to sit through without cringing. | Negative |

| 4 | Avengers: Endgame is a spectacular conclusion to the Marvel saga, filled with epic action scenes, emotional depth, and fan-pleasing moments. | Positive |
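Because the model returns free text, the label may vary in case, punctuation, or wording between calls (for instance `Positive.` or `The sentiment is positive`). The following is a minimal sketch of a post-processing step that maps each reply to one of the three expected labels. The helper name `normalize_sentiment` and the `unknown` fallback are illustrative assumptions, not part of the OpenAI API:

```python
import re

VALID_LABELS = ("negative", "neutral", "positive")

def normalize_sentiment(raw_reply):
    """Map a free-text model reply to one of the expected labels.

    Returns 'unknown' when no label, or more than one label, is found.
    """
    # Lowercase and split into words so case and punctuation are ignored
    words = re.findall(r"[a-z]+", raw_reply.lower())
    found = {label for label in VALID_LABELS if label in words}
    # Accept the reply only when exactly one label is mentioned
    return found.pop() if len(found) == 1 else "unknown"

print(normalize_sentiment("Positive"))                       # positive
print(normalize_sentiment(" The sentiment is negative.\n"))  # negative
print(normalize_sentiment("Could be positive or negative"))  # unknown
```

It could be applied to the column produced above with df['MovieSentiment'].apply(normalize_sentiment), which makes later grouping and counting of labels more reliable.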

18.4 Text Manipulation with BeautifulSoup

BeautifulSoup is a Python library for pulling data out of HTML and XML files. It works with your favorite parser to provide idiomatic ways of navigating, searching, and modifying the parse tree. BeautifulSoup is especially relevant to any aspiring data scientist or AI practitioner because it enables web scraping.

The best way to learn BeautifulSoup is to apply it to an HTML document.

For illustration purposes, let’s assume we have harvested the following HTML from the web:

html_doc = """<html><head><title>A Bedtime Story</title></head>

<body>

<p class="title">The Dormouse's story</p>

<p class="story">Once upon a time there were three little sisters; and their names were

Elsie, Lacie and Tillie and they lived at the bottom of a well.</p>

<p class="copyright"><a href="https://creativecommons.org/share-your-work/cclicenses/">Creative Commons</a></p>

</body>

"""

Running the “three sisters” document through BeautifulSoup gives us a BeautifulSoup object, which represents the document as a nested data structure:

from bs4 import BeautifulSoup

soup = BeautifulSoup(html_doc, 'html.parser')

print(soup.prettify())

<html>

<head>

<title>

A Bedtime Story

</title>

</head>

<body>

<p class="title">

The Dormouse's story

</p>

<p class="story">

Once upon a time there were three little sisters; and their names were

Elsie, Lacie and Tillie and they lived at the bottom of a well.

</p>

<p class="copyright">

<a href="https://creativecommons.org/share-your-work/cclicenses/">

Creative Commons

</a>

</p>

</body>

</html>

Once BeautifulSoup has parsed the HTML document, we can navigate its structure and access the data we need. For instance, we can access the content of the head and body sections as follows:

# Extract the head section

print("Content of the head Section:", soup.head)

print("Title in the head section:", soup.head.title.text)

# Extract the body section

print("Content of the body section:\n",soup.body)

Content of the head Section: <head><title>A Bedtime Story</title></head>

Title in the head section: A Bedtime Story

Content of the body section:

<body>

<p class="title">The Dormouse's story</p>

<p class="story">Once upon a time there were three little sisters; and their names were

Elsie, Lacie and Tillie and they lived at the bottom of a well.</p>

<p class="copyright"><a href="https://creativecommons.org/share-your-work/cclicenses/">Creative Commons</a></p>

</body>

In HTML, most sections contain several tags. BeautifulSoup provides the find_all() method which, when applied to a specific section (e.g. body), returns a list of all the tags it contains. For instance:

soup.body.find_all()

[<p class="title">The Dormouse's story</p>,
<p class="story">Once upon a time there were three little sisters; and their names were
Elsie, Lacie and Tillie and they lived at the bottom of a well.</p>,
<p class="copyright"><a href="https://creativecommons.org/share-your-work/cclicenses/">Creative Commons</a></p>,
<a href="https://creativecommons.org/share-your-work/cclicenses/">Creative Commons</a>]

find_all() also accepts a tag name and a CSS class to narrow the search:

soup.find_all("p", "story")

[<p class="story">Once upon a time there were three little sisters; and their names were
Elsie, Lacie and Tillie and they lived at the bottom of a well.</p>]

soup.find_all("p", "copyright")

[<p class="copyright"><a href="https://creativecommons.org/share-your-work/cclicenses/">Creative Commons</a></p>]

Because find_all() returns a list, its result can be processed further, as illustrated in the following example:

[x.text for x in soup.body.find_all('p')]

["The Dormouse's story",
'Once upon a time there were three little sisters; and their names were\nElsie, Lacie and Tillie and they lived at the bottom of a well.',
'Creative Commons']

The following table provides a list of available methods you can use to process HTML documents using BeautifulSoup:

| Method or attribute | Purpose | Example Usage |
|---|---|---|
| soup.find() | Finds the first occurrence of a tag that matches the criteria | soup.find('p', class_='story') |
| soup.find_all() | Finds all occurrences of a tag that match the criteria | soup.find_all('a') |
| tag.get_text() | Extracts the text content of a tag, including nested tags | soup.find('p', class_='story').get_text() |
| tag.text | Shortcut to .get_text() for text extraction | soup.find('title').text |
| soup.select() | Finds all elements matching a CSS selector | soup.select('p.copyright a') |
| soup.select_one() | Finds the first element matching a CSS selector | soup.select_one('p.copyright a') |
| tag['attribute'] | Accesses a specific attribute of a tag (raises KeyError if not found) | soup.find('a')['href'] |
| tag.get('attribute') | Retrieves a tag’s attribute safely (returns None if not found) | soup.find('a').get('class') |
| tag.contents | Retrieves a list of the direct children of a tag | soup.find('p').contents |
| tag.children | An iterator over the direct children of a tag | list(soup.find('p').children) |
| tag.descendants | An iterator over all nested children of a tag | list(soup.find('p').descendants) |
| tag.parent | Accesses the parent tag of a tag | soup.find('a').parent |
| tag.parents | Iterates over all ancestor tags | list(soup.find('a').parents) |
| tag.next_sibling | Accesses the next sibling of a tag | soup.find('a').next_sibling |
| tag.previous_sibling | Accesses the previous sibling of a tag | soup.find('a').previous_sibling |
| tag.next_element | Accesses the next element in the HTML document | soup.find('title').next_element |
| tag.previous_element | Accesses the previous element in the HTML document | soup.find('body').previous_element |
| tag.decompose() | Removes a tag and its contents from the document | tag = soup.find('p'); tag.decompose() |
| tag.replace_with() | Replaces a tag with new content | soup.find('p').replace_with(BeautifulSoup('<div>New content</div>', 'html.parser')) |
| soup.prettify() | Outputs the HTML document with formatted indentation | print(soup.prettify()) |
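A few of these methods and attributes can be tried on a small document. The sketch below uses a shortened copy of the HTML from this section (the variable name `html_snippet` is illustrative) and combines a CSS selector, attribute access, navigation, and removal of a tag:

```python
from bs4 import BeautifulSoup

html_snippet = """<html><head><title>A Bedtime Story</title></head>
<body>
<p class="title">The Dormouse's story</p>
<p class="copyright"><a href="https://creativecommons.org/share-your-work/cclicenses/">Creative Commons</a></p>
</body></html>"""

soup = BeautifulSoup(html_snippet, "html.parser")

# select_one() takes a CSS selector and returns the first match (or None)
link = soup.select_one("p.copyright a")

link_text = link.get_text()      # text inside the <a> tag
link_url = link["href"]          # would raise KeyError if href were missing
link_class = link.get("class")   # None, because this <a> has no class
parent_name = link.parent.name   # name of the enclosing tag

print(link_text)    # Creative Commons
print(link_url)     # https://creativecommons.org/share-your-work/cclicenses/
print(link_class)   # None
print(parent_name)  # p

# decompose() removes the tag from the tree, so later searches no longer find it
soup.find("p", class_="title").decompose()
print(len(soup.find_all("p")))  # 1
```

Using tag.get() rather than tag['attribute'] is a reasonable default when scraping pages whose markup is not guaranteed to be consistent.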

18.5 Web Scraping with BeautifulSoup

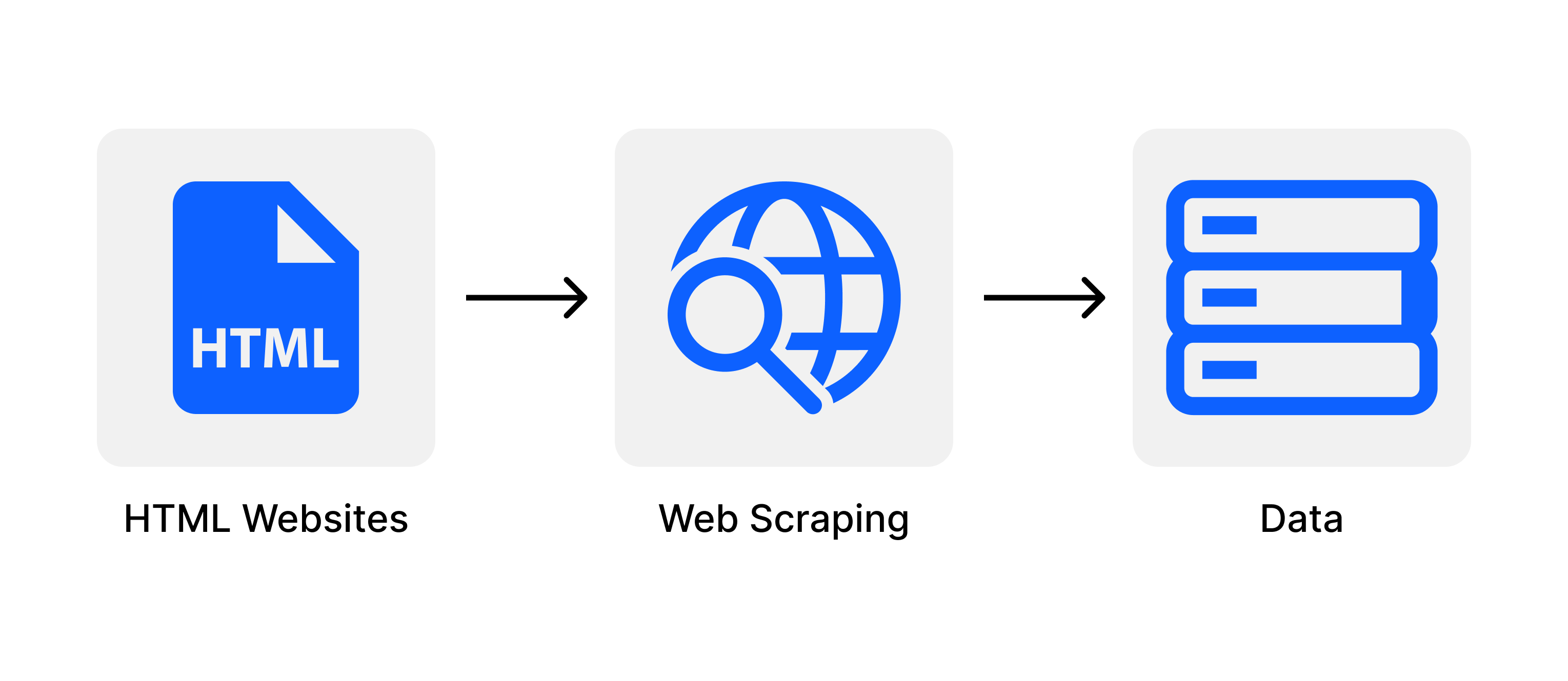

As the figure illustrates, web scraping is the process of extracting data from websites by automatically retrieving, parsing, and storing information. This technique enables you to collect data from a wide range of web pages for analysis or use in applications without manual copy-pasting. Important use cases in data science and machine learning involve the retrieval of content such as text, images, product prices, reviews, or news articles. Generative AI-based systems, such as ChatGPT, have been developed using vast amounts of information harvested from the internet.

Relevant use cases involving web scraping are:

Market Research and Competitive Analysis: Web scraping is commonly used for market research, as it allows businesses to track market trends, gather customer reviews, and analyze competitor activity. This kind of data helps companies refine their products, services, and marketing approaches to stay competitive.

Content Aggregation: Many websites and applications aggregate content from multiple sources, such as job listings, news updates, or real estate data. Web scraping facilitates gathering and organizing this data, offering users a centralized location to access information.

Machine Learning and Data Science: High-quality datasets are essential for machine learning and data science projects. When there are no available or sufficient open datasets, web scraping can help collect the data needed to train and test models.

News and Sentiment Analysis: Analysts and researchers can scrape news websites, social media, or forums to monitor public sentiment and trending topics, especially important in finance, politics, and media. By tracking how sentiments change over time, they can make informed predictions or provide timely insights.

Real Estate and Financial Services: Real estate platforms can use web scraping to track property prices, listings, or trends across locations. Similarly, financial services firms may use scraping to gather stock data, company news, or economic indicators for investment analysis.

Web scraping in Python can be done using libraries such as BeautifulSoup, Requests, Scrapy and Selenium. In this book we use only Requests and BeautifulSoup; more complex use cases (e.g. pages whose content is generated dynamically) might require Selenium.

Example

Let’s assume that you need to scrape the Books to Scrape web page (http://books.toscrape.com/) to extract book details such as titles, prices and ratings.

To accomplish this task you need two Python libraries: Requests and BeautifulSoup. You need the former to retrieve the HTML content and the latter to extract the information that you need.

import requests
from bs4 import BeautifulSoup

url = 'http://books.toscrape.com/'
response = requests.get(url)

if response.status_code == 200:
    print("Successfully fetched the page!")
    page_content = response.text
else:
    print("Failed to retrieve the page")

Successfully fetched the page!

Now that you have retrieved the page, you need to have it processed by BeautifulSoup as follows:

soup = BeautifulSoup(page_content, 'html.parser')

By inspecting the HTML of the page (you need a web browser for this), we know that:

- Each book is inside an `<article class="product_pod">` tag.
- The title is stored in the title attribute of the `<a>` tag inside an `<h3>` tag.
- The price is inside a `<p class="price_color">` tag.
- The rating is encoded as a class in `<p class="star-rating">`, with values like “star-rating Three” for a 3-star rating.
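Both the rating and the price are stored as strings, so they usually need converting before any numerical analysis. The following is a minimal sketch, assuming the class list has the form described above (for example `['star-rating', 'Three']`) and that prices look like `£51.77`. The helper names `rating_to_int` and `price_to_float` are illustrative:

```python
import re

# Mapping from the rating word used in the class list to a number
RATING_WORDS = {"One": 1, "Two": 2, "Three": 3, "Four": 4, "Five": 5}

def rating_to_int(class_list):
    """Return the numeric rating found in a list of CSS classes, or None."""
    for css_class in class_list:
        if css_class in RATING_WORDS:
            return RATING_WORDS[css_class]
    return None

def price_to_float(price_text):
    """Extract the first decimal number from a price string, or None."""
    match = re.search(r"\d+(?:\.\d+)?", price_text)
    return float(match.group()) if match else None

print(rating_to_int(["star-rating", "Three"]))  # 3
print(price_to_float("£51.77"))                 # 51.77
```

Searching for the number with a regular expression, rather than slicing off the first character, keeps the conversion working if the currency symbol is missing or is preceded by stray characters.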

The following code allows you to fetch the needed information:

books = []

for book in soup.find_all('article', class_='product_pod'):
    # Title
    title = book.h3.a['title']
    # Price
    price = book.find('p', class_='price_color').text
    # Rating
    rating_class = book.find('p', class_='star-rating')['class']
    rating = rating_class[1]  # The second class is the rating (e.g., "Three")
    # Append book info to the list
    books.append({'Title': title, 'Price': price, 'Rating': rating})

# Print results
for book in books:
    print(book)

{'Title': 'A Light in the Attic', 'Price': '£51.77', 'Rating': 'Three'}

{'Title': 'Tipping the Velvet', 'Price': '£53.74', 'Rating': 'One'}

{'Title': 'Soumission', 'Price': '£50.10', 'Rating': 'One'}

{'Title': 'Sharp Objects', 'Price': '£47.82', 'Rating': 'Four'}

{'Title': 'Sapiens: A Brief History of Humankind', 'Price': '£54.23', 'Rating': 'Five'}

{'Title': 'The Requiem Red', 'Price': '£22.65', 'Rating': 'One'}

{'Title': 'The Dirty Little Secrets of Getting Your Dream Job', 'Price': '£33.34', 'Rating': 'Four'}

{'Title': 'The Coming Woman: A Novel Based on the Life of the Infamous Feminist, Victoria Woodhull', 'Price': '£17.93', 'Rating': 'Three'}

{'Title': 'The Boys in the Boat: Nine Americans and Their Epic Quest for Gold at the 1936 Berlin Olympics', 'Price': '£22.60', 'Rating': 'Four'}

{'Title': 'The Black Maria', 'Price': '£52.15', 'Rating': 'One'}

{'Title': 'Starving Hearts (Triangular Trade Trilogy, #1)', 'Price': '£13.99', 'Rating': 'Two'}

{'Title': "Shakespeare's Sonnets", 'Price': '£20.66', 'Rating': 'Four'}

{'Title': 'Set Me Free', 'Price': '£17.46', 'Rating': 'Five'}

{'Title': "Scott Pilgrim's Precious Little Life (Scott Pilgrim #1)", 'Price': '£52.29', 'Rating': 'Five'}

{'Title': 'Rip it Up and Start Again', 'Price': '£35.02', 'Rating': 'Five'}

{'Title': 'Our Band Could Be Your Life: Scenes from the American Indie Underground, 1981-1991', 'Price': '£57.25', 'Rating': 'Three'}

{'Title': 'Olio', 'Price': '£23.88', 'Rating': 'One'}

{'Title': 'Mesaerion: The Best Science Fiction Stories 1800-1849', 'Price': '£37.59', 'Rating': 'One'}

{'Title': 'Libertarianism for Beginners', 'Price': '£51.33', 'Rating': 'Two'}

{'Title': "It's Only the Himalayas", 'Price': '£45.17', 'Rating': 'Two'}

18.6 Text Manipulation in Practice

First Example (Digital Marketing)

The following Jupyter Notebook illustrates how to manipulate textual information from a retail dataset using generative AI and Pandas native methods.

Second Example (Healthcare)

The following Jupyter Notebook illustrates how to perform text manipulation on a healthcare dataset.

18.7 Conclusions

String and text manipulation are essential skills in data science, particularly as unstructured textual data becomes increasingly prevalent. Textual data sources like emails, social media posts, customer reviews, and logs are rich in insights but require significant preprocessing to be usable for analysis.

Python offers robust tools for string manipulation, including built-in methods, f-strings for formatting, and libraries like Pandas and BeautifulSoup for handling large datasets or web scraping. Regular expressions add versatility, enabling complex pattern matching and text extraction. Advanced techniques powered by generative AI further enhance capabilities, supporting applications like summarisation, sentiment analysis, and content generation. These methods will allow you to unlock insights from text, develop predictive models, and communicate findings effectively through visualizations or actionable summaries.

In the next chapter you will learn how to process JSON-based textual information.

18.8 Further Readings

If you need additional, more advanced material, please refer to the following references:

If you want a good reference site: Official Pandas Text Manipulation Reference

BeautifulSoup Documentation

OpenAI Documentation