import pandas as pd

# Create a Series

s = pd.Series([1, 3, 5, 7, 9])

s0 1

1 3

2 5

3 7

4 9

dtype: int64Pandas is a powerful, open-source library for data manipulation and analysis in Python. It’s widely used in the field of data science because it provides essential data structures and functions that make working with structured data easy and efficient.

Think of Pandas as a toolkit that allows you to handle, manipulate, and analyse data in a way that’s both intuitive and high-performing.

Pandas is important in data science for several reasons:

Data Cleaning and Preparation: Data scientists often work with real-world data, which can be messy. Pandas provides functions to easily handle missing values, filter out unnecessary information, and format data into a more useful structure. This step is crucial since clean data leads to more accurate analysis.

Data Analysis: Pandas allows you to perform powerful data analysis quickly. You can compute summary statistics, filter data based on conditions, and perform aggregations efficiently. This is very useful for exploratory data analysis (EDA), where you seek to understand the underlying patterns in your data.

Manipulating and Reshaping Data: It offers robust functionality for transforming data, such as merging, concatenating, and reshaping datasets. This is essential when trying to combine multiple data sources or rearranging data to fit analysis needs.

Integration with Other Libraries: Pandas works seamlessly with other libraries like NumPy (for numerical computations), Matplotlib (for visualization), and Scikit-learn (for machine learning). This interoperability makes it a foundational tool in the data science ecosystem.

Performance: Despite being a high-level library, Pandas is optimised for performance. It leverages efficient data structures based on NumPy arrays, making operations on large datasets faster than you might expect.

Ease of Use:The syntax and functions in Pandas are designed for usability. Even if you are new to programming or data analysis, you can perform complex data manipulations with just a few lines of code. This makes it accessible for beginners and useful for experienced data professionals alike.

For instance Pandas can be used in the following use cases:

Analysing Sales Data: Imagine you have a CSV file containing sales data with columns for product, price, and quantity sold. With Pandas, you can easily read the file, clean the data (like removing NaN values), filter products above a certain price, and compute total sales.

Weather Data Analysis: If you’re analysing climate data over several years, you can use Pandas to read the datasets, aggregate monthly averages, and visualise trends.

Customer Data Management: When managing customer information for a business, Pandas can help you analyse customer behaviour, segment users, and predict trends based on historical data.

The two primary data structures in Pandas are:

Series: A one-dimensional labelled array that can hold any data type (integers, floats, strings, etc.). You can think of it as a single column from a spreadsheet. The axis labels are collectively referred to as the index.

import pandas as pd

# Create a Series

s = pd.Series([1, 3, 5, 7, 9])

s0 1

1 3

2 5

3 7

4 9

dtype: int64DataFrame: A two-dimensional labelled data structure with columns of potentially different types. It’s similar to a spreadsheet or SQL table, making it very easy to visualise and understand. A DataFrame also has an index.

# Create a DataFrame

import pandas as pd

data = {

'Name': ['Bob', 'Alice', 'Charlie'],

'Age': [25, 35, 30],

'City': ['New York', 'Los Angeles', 'Chicago']

}

df = pd.DataFrame(data)

df| Name | Age | City | |

|---|---|---|---|

| 0 | Bob | 25 | New York |

| 1 | Alice | 35 | Los Angeles |

| 2 | Charlie | 30 | Chicago |

In Pandas, basic operations over DataFrames include several essential tasks for data manipulation and analysis. First, you can create a DataFrame from various data sources, such as dictionaries or CSV files. Inspecting the DataFrame can be done using methods like head(), tail(), info(), and describe() to understand its structure and summary statistics. You can then select specific data by accessing columns with bracket notation or using methods like loc and iloc for row and column selection. Filtering allows you to retrieve rows based on conditions, complemented by the ability to add or modify columns easily. For data cleaning, removing columns or rows can be done using the drop() method. You can sort data using the sort_values() method and group data with groupby() to perform aggregation operations. Merging and joining multiple DataFrames can be achieved using join(), merge() or concat(), and finally, you can save your DataFrame to various file formats, such as CSV or Excel, using methods like to_csv(). Together, these operations form the backbone of data manipulation in Pandas.

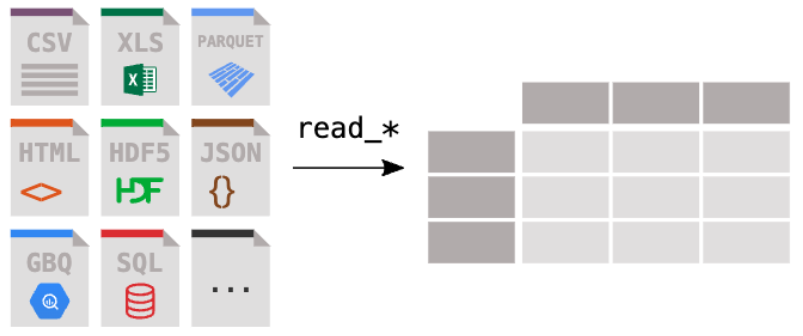

Usually you will be creating DataFrames by importing existing datasets, Pandas offers several methods to import external datasets, refer to the following figure.

For instance, to import CSV files we use pandas.read_csv(). In the example following we access a URL "https://download.mlcc.google.com/mledu-datasets/" to download a CSV file named california_housing_train.csv and have it converted into a Pandas DataFrame called new_dataframe.

# Create a new DataFrame by importing an external CSV file

import pandas as pd

new_dataframe = pd.read_csv("https://download.mlcc.google.com/mledu-datasets/california_housing_train.csv", sep=",")

new_dataframe| longitude | latitude | housing_median_age | total_rooms | total_bedrooms | population | households | median_income | median_house_value | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | -114.31 | 34.19 | 15.0 | 5612.0 | 1283.0 | 1015.0 | 472.0 | 1.4936 | 66900.0 |

| 1 | -114.47 | 34.40 | 19.0 | 7650.0 | 1901.0 | 1129.0 | 463.0 | 1.8200 | 80100.0 |

| 2 | -114.56 | 33.69 | 17.0 | 720.0 | 174.0 | 333.0 | 117.0 | 1.6509 | 85700.0 |

| 3 | -114.57 | 33.64 | 14.0 | 1501.0 | 337.0 | 515.0 | 226.0 | 3.1917 | 73400.0 |

| 4 | -114.57 | 33.57 | 20.0 | 1454.0 | 326.0 | 624.0 | 262.0 | 1.9250 | 65500.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 16995 | -124.26 | 40.58 | 52.0 | 2217.0 | 394.0 | 907.0 | 369.0 | 2.3571 | 111400.0 |

| 16996 | -124.27 | 40.69 | 36.0 | 2349.0 | 528.0 | 1194.0 | 465.0 | 2.5179 | 79000.0 |

| 16997 | -124.30 | 41.84 | 17.0 | 2677.0 | 531.0 | 1244.0 | 456.0 | 3.0313 | 103600.0 |

| 16998 | -124.30 | 41.80 | 19.0 | 2672.0 | 552.0 | 1298.0 | 478.0 | 1.9797 | 85800.0 |

| 16999 | -124.35 | 40.54 | 52.0 | 1820.0 | 300.0 | 806.0 | 270.0 | 3.0147 | 94600.0 |

17000 rows × 9 columns

To import XLS or JSON files we would use pandas.read_excel() and pandas.read_json() respectively. Refer to Pandas IO methods for additional methods to import external datasets.

Indeed DataFrames can be created from scratch using the pandas.DataFrame() method. The following example shows how to create a new DataFrame from a Dictionary.

# Step 1 import pandas

import pandas as pd

# Step 2: Define the dictionary

data = {

'Name': ['Alice', 'Bob', 'Charlie','John','Sean','Tobias'], # Column 'Name' with names

'Age': [25, 30, 35,50,47,59], # Column 'Age' with ages

'City': ['New York', 'Los Angeles', 'Chicago','Hong Kong','London','Beijing'], # Column 'City' with cities

'Salary': [70000, 80000, 60000,45000,125000,36000] # Column 'Salary' with salaries

}

# Step 3: Create the DataFrame

df = pd.DataFrame(data)

# Display the DataFrame

df| Name | Age | City | Salary | |

|---|---|---|---|---|

| 0 | Alice | 25 | New York | 70000 |

| 1 | Bob | 30 | Los Angeles | 80000 |

| 2 | Charlie | 35 | Chicago | 60000 |

| 3 | John | 50 | Hong Kong | 45000 |

| 4 | Sean | 47 | London | 125000 |

| 5 | Tobias | 59 | Beijing | 36000 |

You will notice that the DataFrame df has an additional column at the beginning that runs from zero to five. This is called the index of the DataFrame and provides a convenient mechanism to name each row automatically.

Pandas offers useful methods to inspect existing DataFrames. This is useful to conduct exploratory data analysis (EDA) and gain a preliminary understanding of the dataset structure.

To have a look at the first rows of a DataFrame we use the pandas.head() method

df.head() # Displays the first 5 rows by default| Name | Age | City | Salary | |

|---|---|---|---|---|

| 0 | Alice | 25 | New York | 70000 |

| 1 | Bob | 30 | Los Angeles | 80000 |

| 2 | Charlie | 35 | Chicago | 60000 |

| 3 | John | 50 | Hong Kong | 45000 |

| 4 | Sean | 47 | London | 125000 |

To have a look at the last rows of a DataFrame we use the pandas.tail() method

df.tail() # Displays the last 5 rows by default| Name | Age | City | Salary | |

|---|---|---|---|---|

| 1 | Bob | 30 | Los Angeles | 80000 |

| 2 | Charlie | 35 | Chicago | 60000 |

| 3 | John | 50 | Hong Kong | 45000 |

| 4 | Sean | 47 | London | 125000 |

| 5 | Tobias | 59 | Beijing | 36000 |

To have a look at a random sample of rows of a DataFrame we use the pandas.sample() method

df.sample(n=2) # Displays a random sample of 2 rows| Name | Age | City | Salary | |

|---|---|---|---|---|

| 2 | Charlie | 35 | Chicago | 60000 |

| 5 | Tobias | 59 | Beijing | 36000 |

To get a summary of a DataFrame we use the pandas.info() method .

df.info() # Displays a summary of the DataFrame<class 'pandas.core.frame.DataFrame'>

RangeIndex: 6 entries, 0 to 5

Data columns (total 4 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Name 6 non-null object

1 Age 6 non-null int64

2 City 6 non-null object

3 Salary 6 non-null int64

dtypes: int64(2), object(2)

memory usage: 324.0+ bytesBased on the previous information we learn that the df DataFrame has 4 columns: ‘Name’, ‘Age’, ‘City’ and ‘Salary’. ‘Name’ and ‘City’ are objects, ‘Age’ and ‘Salary’ are int64 (this is integers with a precision of 64 bits). the DataFrame has 3 rows and requires 224 bytes of memory. It also has an index consisting of 6 elements [0-5].

To get a basic statistical description of a DataFrame we use the pandas.describe() method .

df.describe() # Displays a basic statistical description of numerical columns in the DataFrame| Age | Salary | |

|---|---|---|

| count | 6.000000 | 6.000000 |

| mean | 41.000000 | 69333.333333 |

| std | 13.069047 | 31633.315771 |

| min | 25.000000 | 36000.000000 |

| 25% | 31.250000 | 48750.000000 |

| 50% | 41.000000 | 65000.000000 |

| 75% | 49.250000 | 77500.000000 |

| max | 59.000000 | 125000.000000 |

By default Pandas associates a numerical index by default, it is possible however to specify your own index when creating new DataFrames, as illustrated in the following two examples:

california_dataframe = pd.read_csv("https://download.mlcc.google.com/mledu-datasets/california_housing_train.csv", sep=",",index_col='population')

california_dataframe| longitude | latitude | housing_median_age | total_rooms | total_bedrooms | households | median_income | median_house_value | |

|---|---|---|---|---|---|---|---|---|

| population | ||||||||

| 1015.0 | -114.31 | 34.19 | 15.0 | 5612.0 | 1283.0 | 472.0 | 1.4936 | 66900.0 |

| 1129.0 | -114.47 | 34.40 | 19.0 | 7650.0 | 1901.0 | 463.0 | 1.8200 | 80100.0 |

| 333.0 | -114.56 | 33.69 | 17.0 | 720.0 | 174.0 | 117.0 | 1.6509 | 85700.0 |

| 515.0 | -114.57 | 33.64 | 14.0 | 1501.0 | 337.0 | 226.0 | 3.1917 | 73400.0 |

| 624.0 | -114.57 | 33.57 | 20.0 | 1454.0 | 326.0 | 262.0 | 1.9250 | 65500.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 907.0 | -124.26 | 40.58 | 52.0 | 2217.0 | 394.0 | 369.0 | 2.3571 | 111400.0 |

| 1194.0 | -124.27 | 40.69 | 36.0 | 2349.0 | 528.0 | 465.0 | 2.5179 | 79000.0 |

| 1244.0 | -124.30 | 41.84 | 17.0 | 2677.0 | 531.0 | 456.0 | 3.0313 | 103600.0 |

| 1298.0 | -124.30 | 41.80 | 19.0 | 2672.0 | 552.0 | 478.0 | 1.9797 | 85800.0 |

| 806.0 | -124.35 | 40.54 | 52.0 | 1820.0 | 300.0 | 270.0 | 3.0147 | 94600.0 |

17000 rows × 8 columns

# Create a DataFrame

import pandas as pd

data = {

'Name': ['Bob', 'Alice', 'Charlie'],

'Age': [25, 35, 30],

'City': ['New York', 'Los Angeles', 'Chicago'],

'Salary': [30000, 80000, 60000]

}

labels=['Employee_001','Employee_002','Employee_003']

df2 = pd.DataFrame(data,index=labels)

df2| Name | Age | City | Salary | |

|---|---|---|---|---|

| Employee_001 | Bob | 25 | New York | 30000 |

| Employee_002 | Alice | 35 | Los Angeles | 80000 |

| Employee_003 | Charlie | 30 | Chicago | 60000 |

The index of an existing DataFrame can be manipulated via DataFrame.set_index() and DataFrame.reset_index(), for instance the following code resets the existing index creating a new one.

df2_new=df2.reset_index()

df2_new| index | Name | Age | City | Salary | |

|---|---|---|---|---|---|

| 0 | Employee_001 | Bob | 25 | New York | 30000 |

| 1 | Employee_002 | Alice | 35 | Los Angeles | 80000 |

| 2 | Employee_003 | Charlie | 30 | Chicago | 60000 |

You can use the option drop=True parameter to avoid the old index being added as a column:

df2_new=df2.reset_index(drop=True)

df2_new| Name | Age | City | Salary | |

|---|---|---|---|---|

| 0 | Bob | 25 | New York | 30000 |

| 1 | Alice | 35 | Los Angeles | 80000 |

| 2 | Charlie | 30 | Chicago | 60000 |

Even better you can use the option inplace=True to apply the changes in the original dataframe as follows:

df2.reset_index(inplace=True,drop=True)

df2| Name | Age | City | Salary | |

|---|---|---|---|---|

| 0 | Bob | 25 | New York | 30000 |

| 1 | Alice | 35 | Los Angeles | 80000 |

| 2 | Charlie | 30 | Chicago | 60000 |

Given an existing DataFrame you can set an existing column as the new index with DataFrame.set_index(), for instance:

df2_new=df2.set_index('City')

df2_new| Name | Age | Salary | |

|---|---|---|---|

| City | |||

| New York | Bob | 25 | 30000 |

| Los Angeles | Alice | 35 | 80000 |

| Chicago | Charlie | 30 | 60000 |

Again you can use the option inplace=True to apply the changes to the existing dataframe:

df2.set_index('City',inplace=True)

df2| Name | Age | Salary | |

|---|---|---|---|

| City | |||

| New York | Bob | 25 | 30000 |

| Los Angeles | Alice | 35 | 80000 |

| Chicago | Charlie | 30 | 60000 |

Two useful methods are DataFrame.sort_values() and DataFrame.sort_index() as they allow you to sort DataFrames by columns or by index.

The following examples order the df2 DataFrame by the column Name in ascending and descending order respectively.

df2.sort_values(by='Name',inplace=True)

df2| Name | Age | Salary | |

|---|---|---|---|

| City | |||

| Los Angeles | Alice | 35 | 80000 |

| New York | Bob | 25 | 30000 |

| Chicago | Charlie | 30 | 60000 |

df2.sort_values(by='Salary',inplace=True, ascending=False)

df2| Name | Age | Salary | |

|---|---|---|---|

| City | |||

| Los Angeles | Alice | 35 | 80000 |

| Chicago | Charlie | 30 | 60000 |

| New York | Bob | 25 | 30000 |

It is also possible to sort DataFrames by index, for instance:

df2.sort_index(inplace=True)

df2| Name | Age | Salary | |

|---|---|---|---|

| City | |||

| Chicago | Charlie | 30 | 60000 |

| Los Angeles | Alice | 35 | 80000 |

| New York | Bob | 25 | 30000 |

Pandas offers several methods to select columns from existing DataFrames. For instance, to select a single column we use the indexing operator [] with the name of the column we are interested in.

ages = df2['Age']

agesCity

Chicago 30

Los Angeles 35

New York 25

Name: Age, dtype: int64To select several columns we use the indexing operator [] with a list of the names of the columns we want to select.

names_and_ages = df2[['Name','Age']]

names_and_ages| Name | Age | |

|---|---|---|

| City | ||

| Chicago | Charlie | 30 |

| Los Angeles | Alice | 35 |

| New York | Bob | 25 |

Pandas offers several methods to select rows from an existing DataFrame. For instance to select a single row we use the indexing operator DataFrame.loc[] with the name of the row we want to retrieve. Remember that the index provides a naming for each row. For instance the following example retrieves the row with the name ‘Chicago’ frim the DataFrame df2.

row_named_Chicago = df2.loc['Chicago']

row_named_ChicagoName Charlie

Age 30

Salary 60000

Name: Chicago, dtype: objectTo select several rows by name we use the indexing operator DataFrame.loc[] this time with a list of names of the rows we want to retrieve. The following example retrieves rows with names ‘1’ and ‘5’.

rows_named_Chicago_NewYork = df2.loc[['Chicago','New York']]

rows_named_Chicago_NewYork| Name | Age | Salary | |

|---|---|---|---|

| City | |||

| Chicago | Charlie | 30 | 60000 |

| New York | Bob | 25 | 30000 |

In future chapters we will see other uses of the operator df.loc[], for instance to filter rows based on conditions.

Pandas offers many numerical operations that allow you to perform calculations on DataFrames efficiently. For instance:

df2['Age'].mean()30.0df2['Salary'].max()80000df2['Salary']/1000000City

Chicago 0.06

Los Angeles 0.08

New York 0.03

Name: Salary, dtype: float64df2['Salary']/df2['Age']City

Chicago 2000.000000

Los Angeles 2285.714286

New York 1200.000000

dtype: float64df2['Salary']/df2['Salary'].min()City

Chicago 2.000000

Los Angeles 2.666667

New York 1.000000

Name: Salary, dtype: float64The following table lists some common numerical operations available in pandas:

| Operation | Description | Example Code |

|---|---|---|

| Addition | Adds a value to each element or adds two DataFrames. | df + 10 or df + other_df |

| Subtraction | Subtracts a value from each element or subtracts two DataFrames. | df - 5 or df - other_df |

| Multiplication | Multiplies each element by a value or multiplies two DataFrames element-wise. | df * 2 or df * other_df |

| Division | Divides each element by a value or divides two DataFrames element-wise. | df / 3 or df / other_df |

| Sum | Computes the sum for each column (or row with axis=1). |

df.sum() |

| Mean | Computes the mean of each column (or row with axis=1). |

df.mean() |

| Median | Computes the median of each column (or row with axis=1). |

df.median() |

| Min/Max | Computes the minimum or maximum of each column (or row with axis=1). |

df.min(), df.max() |

| Standard Deviation | Computes the standard deviation of each column (or row with axis=1). |

df.std() |

| Variance | Computes the variance of each column (or row with axis=1). |

df.var() |

| Cumulative Sum | Computes the cumulative sum of each column (or row with axis=1). |

df.cumsum() |

| Cumulative Product | Computes the cumulative product of each column (or row with axis=1). |

df.cumprod() |

| Difference | Computes the difference between consecutive elements in each column (or row with axis=1). |

df.diff() |

| Correlation | Computes the pairwise correlation between columns. | df.corr() |

| Covariance | Computes the pairwise covariance between columns. | df.cov() |

| Rank | Assigns ranks to values in each column (or row with axis=1). |

df.rank() |

| Quantile | Returns the specified quantile for each column. | df.quantile(0.5) |

| Rounding | Rounds values to the specified number of decimal places. | df.round(2) |

| Absolute Value | Takes the absolute value of each element. | df.abs() |

| Exponential | Applies the exponential function to each element. | df.exp() |

| Standardization (Z-score) | Scales values to have a mean of 0 and standard deviation of 1. | (df - df.mean()) / df.std() |

| Min-Max Scaling | Scales values between 0 and 1. | (df - df.min()) / (df.max() - df.min()) |

Following please find some examples of python code. Try to understand what the code is trying to accomplish before checking the solution below:

import pandas as pd

# URL of the Titanic dataset

url = 'https://raw.githubusercontent.com/thousandoaks/Python4DS-I/refs/heads/main/datasets/Titanic-Dataset.csv'

# Load the dataset into a DataFrame

df = pd.read_csv(url)

# Display the first few rows of the DataFrame

df.head()This code loads the Titanic dataset from the given URL into a Pandas DataFrame. To do so it stores the dataset URL in a variable and use the pd.read_csv() function to read the CSV file directly from the web into a DataFrame named df. Finally, to verify the successful data loading, display the first few rows using the df.head() function.

| PassengerId | Survived | Pclass | Name | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 0 | 3 | Braund, Mr. Owen Harris | male | 22.0 | 1 | 0 | A/5 21171 | 7.2500 | NaN | S |

| 1 | 2 | 1 | 1 | Cumings, Mrs. John Bradley (Florence Briggs Th... | female | 38.0 | 1 | 0 | PC 17599 | 71.2833 | C85 | C |

| 2 | 3 | 1 | 3 | Heikkinen, Miss. Laina | female | 26.0 | 0 | 0 | STON/O2. 3101282 | 7.9250 | NaN | S |

| 3 | 4 | 1 | 1 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | female | 35.0 | 1 | 0 | 113803 | 53.1000 | C123 | S |

| 4 | 5 | 0 | 3 | Allen, Mr. William Henry | male | 35.0 | 0 | 0 | 373450 | 8.0500 | NaN | S |

# Set the 'Name' column as the new index

df.set_index('Name', inplace=True)

# Display the DataFrame with the new index

df.head()To set a specific column as the new index in the DataFrame df, the set_index() function is used with the column name, such as Name, as its argument. By including the inplace=True parameter, the operation is performed directly on the existing DataFrame without the need to assign it to a new variable. This effectively replaces the default integer index with the values from the specified column. Finally, calling df.head() allows verification by displaying the first few rows of the updated DataFrame. This method is useful for better data organization and efficient lookups based on meaningful identifiers.

| PassengerId | Survived | Pclass | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Name | |||||||||||

| Braund, Mr. Owen Harris | 1 | 0 | 3 | male | 22.0 | 1 | 0 | A/5 21171 | 7.2500 | NaN | S |

| Cumings, Mrs. John Bradley (Florence Briggs Thayer) | 2 | 1 | 1 | female | 38.0 | 1 | 0 | PC 17599 | 71.2833 | C85 | C |

| Heikkinen, Miss. Laina | 3 | 1 | 3 | female | 26.0 | 0 | 0 | STON/O2. 3101282 | 7.9250 | NaN | S |

| Futrelle, Mrs. Jacques Heath (Lily May Peel) | 4 | 1 | 1 | female | 35.0 | 1 | 0 | 113803 | 53.1000 | C123 | S |

| Allen, Mr. William Henry | 5 | 0 | 3 | male | 35.0 | 0 | 0 | 373450 | 8.0500 | NaN | S |

# Sort the DataFrame by the 'Fare' column in descending order

df_sorted = df.sort_values(by='Fare', ascending=False)

# Display the first few rows of the sorted DataFrame

df_sorted.head()To sort the DataFrame df based on the values in the Fare column in descending order, the sort_values() function is used with the parameter by=‘Fare’ to specify the column to be sorted. The argument ascending=False ensures that the sorting is done in descending order, meaning the highest fares will appear at the top. The sorted DataFrame is then stored in df_sorted without modifying the original df. Finally, calling df_sorted.head() prints the first few rows of the sorted DataFrame to verify the changes. This approach is useful when analysing data to identify trends based on fare prices.

| PassengerId | Survived | Pclass | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Name | |||||||||||

| Ward, Miss. Anna | 259 | 1 | 1 | female | 35.0 | 0 | 0 | PC 17755 | 512.3292 | NaN | C |

| Lesurer, Mr. Gustave J | 738 | 1 | 1 | male | 35.0 | 0 | 0 | PC 17755 | 512.3292 | B101 | C |

| Cardeza, Mr. Thomas Drake Martinez | 680 | 1 | 1 | male | 36.0 | 0 | 1 | PC 17755 | 512.3292 | B51 B53 B55 | C |

| Fortune, Miss. Mabel Helen | 89 | 1 | 1 | female | 23.0 | 3 | 2 | 19950 | 263.0000 | C23 C25 C27 | S |

| Fortune, Mr. Charles Alexander | 28 | 0 | 1 | male | 19.0 | 3 | 2 | 19950 | 263.0000 | C23 C25 C27 | S |

# Calculate the average (mean), max, and min of the 'Age' column

average_age = df['Age'].mean()

max_age = df['Age'].max()

min_age = df['Age'].min()

# Print the results

print("Average Age: ", average_age)

print("Maximum Age: ", max_age)

print("Minimum Age: ", min_age)This code snippet calculates the statistical measures of the Age column in the DataFrame df by using Pandas’ built-in functions. The mean() function computes the average age, max() finds the highest age, and min() determines the lowest age. The results are then printed in a formatted manner to two decimal places for the average age. This approach is useful for summarising numerical data in the dataset efficiently.

Average Age: 29.69911764705882

Maximum Age: 80.0

Minimum Age: 0.42# Combine 'Sex' and 'Ticket' columns into a new column called 'Gender_Ticket'

df[Gender_Ticket'] = df['Sex'] + '_' + df['Ticket']

# Display the first few rows to check the new column

df[['Sex', 'Ticket', 'Gender_Ticket']].head()This code creates a new column named Gender_Ticket by concatenating the values from the Sex and Ticket columns, separating them with an underscore _. The resulting DataFrame is printed to verify the new column. This approach is useful for creating combined identifiers or grouping keys in the dataset.

| Sex | Ticket | Gender_Ticket | |

|---|---|---|---|

| Name | |||

| Braund, Mr. Owen Harris | male | A/5 21171 | male_A/5 21171 |

| Cumings, Mrs. John Bradley (Florence Briggs Thayer) | female | PC 17599 | female_PC 17599 |

| Heikkinen, Miss. Laina | female | STON/O2. 3101282 | female_STON/O2. 3101282 |

| Futrelle, Mrs. Jacques Heath (Lily May Peel) | female | 113803 | female_113803 |

| Allen, Mr. William Henry | male | 373450 | male_373450 |

The best approach to master Pandas is by using it in your data science endeavours. Remember the motto: “Practice makes perfect”.

The following Jupyter Notebook illustrates Pandas’ core functionalities for data manipulation and analysis. It covers essential operations like loading data from CSV files into a DataFrame, accessing specific rows and columns, and selecting subsets of data based on their names or positions.

The following Jupyter Notebook provides a basic introduction to the pandas library in Python, focusing on data manipulation and analysis using the Titanic dataset. It covers essential operations such as loading data into a DataFrame, exploring the dataset with descriptive statistics, manipulating indexes, sorting data, selecting specific columns and rows, and performing basic arithmetic operations on columns.

At this point you are ready to develop some code on your own. Work on the following challenge on your own, then compare your solution with the one provided.

You are tasked with developing a Python script that processes the penguins dataset from the following URL: https://raw.githubusercontent.com/thousandoaks/Python4DS-I/refs/heads/main/datasets/penguins.csv.The script should first load the dataset into a Pandas DataFrame and ensure the data is correctly imported for further analysis. Once loaded, you must implement functionality to sort the dataset in ascending order based on the body_mass_g column. Additionally, the script should compute the average values for key numerical attributes, including bill_length_mm, bill_depth_mm, flipper_length_mm, and body_mass_g.

import pandas as pd

# Step 1: Load the dataset from the given URL

url = 'https://raw.githubusercontent.com/thousandoaks/Python4DS-I/refs/heads/main/datasets/penguins.csv'

df = pd.read_csv(url)

print("Original DataFrame:")

display(df.head())

# Step 2: Sort the DataFrame by 'body_mass_g' in ascending order

df_sorted = df.sort_values(by='body_mass_g', ascending=True)

# Step 3: Compute the average of specified columns

columns_to_average = ["bill_length_mm", "bill_depth_mm", "flipper_length_mm", "body_mass_g"]

average_values = df_sorted[columns_to_average].mean()

# Print the results

print("Sorted DataFrame:")

display(df_sorted.head())

print("\nAverage values of selected columns:")

print(average_values)Original DataFrame:| id | species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex | year | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | Adelie | Torgersen | 39.1 | 18.7 | 181.0 | 3750.0 | male | 2007 |

| 1 | 2 | Adelie | Torgersen | 39.5 | 17.4 | 186.0 | 3800.0 | female | 2007 |

| 2 | 3 | Adelie | Torgersen | 40.3 | 18.0 | 195.0 | 3250.0 | female | 2007 |

| 3 | 4 | Adelie | Torgersen | NaN | NaN | NaN | NaN | NaN | 2007 |

| 4 | 5 | Adelie | Torgersen | 36.7 | 19.3 | 193.0 | 3450.0 | female | 2007 |

Sorted DataFrame:| id | species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex | year | |

|---|---|---|---|---|---|---|---|---|---|

| 314 | 315 | Chinstrap | Dream | 46.9 | 16.6 | 192.0 | 2700.0 | female | 2008 |

| 64 | 65 | Adelie | Biscoe | 36.4 | 17.1 | 184.0 | 2850.0 | female | 2008 |

| 58 | 59 | Adelie | Biscoe | 36.5 | 16.6 | 181.0 | 2850.0 | female | 2008 |

| 116 | 117 | Adelie | Torgersen | 38.6 | 17.0 | 188.0 | 2900.0 | female | 2009 |

| 98 | 99 | Adelie | Dream | 33.1 | 16.1 | 178.0 | 2900.0 | female | 2008 |

Average values of selected columns:

bill_length_mm 43.921930

bill_depth_mm 17.151170

flipper_length_mm 200.915205

body_mass_g 4201.754386

dtype: float64This chapter has introduced Pandas as a fundamental tool for data manipulation and analysis in Python, essential for modern data science workflows. Through its intuitive data structures, Series and DataFrame, Pandas provides a versatile platform for handling and analysing structured data. We explored various aspects of Pandas, from importing and cleaning datasets to performing complex data manipulations such as filtering, reshaping, and merging data. Additionally, Pandas offers efficient handling of missing values, powerful numerical operations, and seamless integration with other libraries, enhancing its utility for exploratory data analysis (EDA) and machine learning tasks.

The chapter emphasised how Pandas simplifies the process of transforming raw data into insights. By automating repetitive data wrangling tasks and providing tools for visualisation and statistical analysis, Pandas allows data scientists to focus more on deriving meaning from data rather than manual data preparation. For those beginning with data science or seeking to refine their analytical skills, mastering Pandas is an invaluable step. Moving forward, applying these techniques in real-world scenarios, as suggested in the practical exercises, will solidify your understanding and open up new possibilities for efficient, insightful data analysis.

In the next chapter you will learn how to group and aggregate DataFrames using Pandas.

Data scientists must be proficient in the use of the Pandas library to harness the complexity arising in industrial contexts and be able to have data that is ready for statistical analysis or AI-based inference. We strongly advice you to have a look at the following references:

If you want a good reference site: Official Pandas Reference

Gentle introduction to Pandas: 10 minutes to Pandas

Additional learning resources (Advanced): Python for Data Analysis